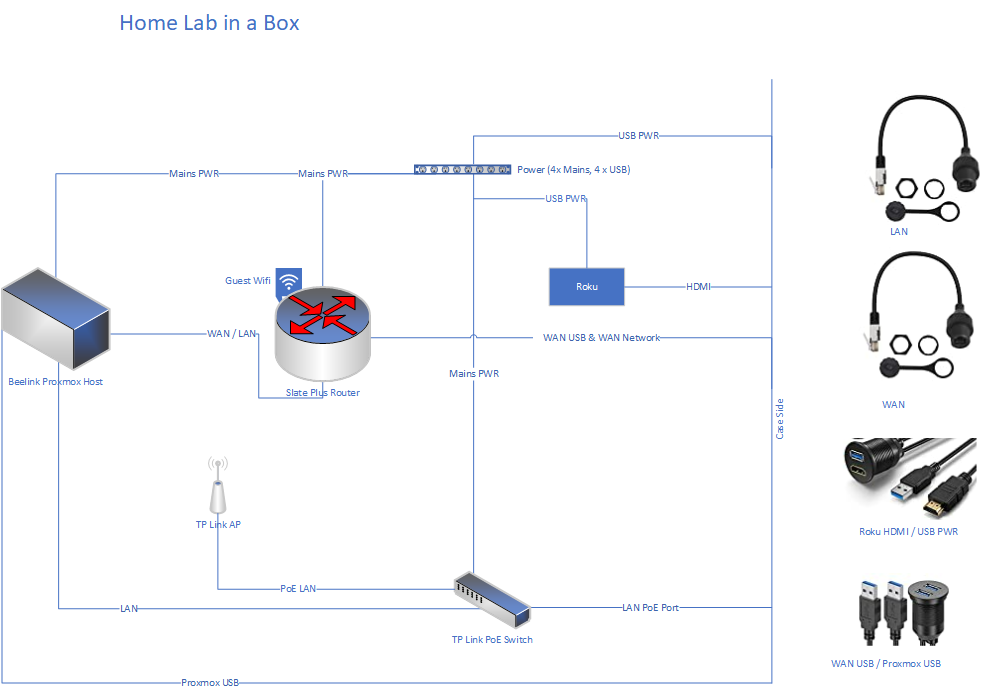

It’s a lab in a box

Something I’ve wanted for a while is a mobile lab in a flight case. Mainly because it would be cool, but also because a fully mobile setup would be handy for demos and small migrations.

I don’t have the budget for a lab in a box that will just sit there, so the components needed to be reusable. I recently came across Beelink mini PCs (I bought one for Sophie for her new Cricut machine) and I’ve been thinking about upgrading the caravan infrastructure. So I thought I would combine the two and design and build a lab in a box.

The main components:

- Beelink Mini PC U59 Pro (N5105, 16GB RAM, 256GB M.2 and 2TB SATA drives, dual Gigabit Ethernet)

- GL.iNet Slate Plus (same chipset as the “cirrus” I use)

- TP-Link Gigabit PoE switch

- TP-Link access point

The plan is to bring a number of ports out to the edge of the case. Extending the connections beyond the walls of the case should make it easier to reach both the internal and external interfaces.

This month I will be building the “IT” bits of the build and hopefully next month buying the case and the ports.

How is it reusable?

- Slate Plus – Router – Can be used as a travel router with no changes.

- Beelink U59 Pro – Server – Can be used as a Proxmox lab with no changes.

- TP-Link – PoE Switch / Access Point – Probably best left configured for the lab.

- Roku – Media Player – Can be used with any Wi-Fi (or combined with Slate Plus for travel).

How could it be extended?

With the external ports there are lots of options. You could add a NAS for migrations, plug in broadband for a demo, tether it to your phone for a rural or mobile deployment (via USB WAN), join it to a conference or hotel wireless network for demos or watching films, download your Plex library to the server for media on the go, deploy it as an office in a box for DR / BCP, or use it as a smart home demo lab with Home Assistant. I’m sure there are more ideas to come!

Once it’s all set up I’ll publish a new post with pics.

Update... It's a lab in a bag

I have finished the build and I am pleased with how it came out. Unfortunately, on a budget lab, I couldn’t stretch to a case this time (that’s for v2, and I already have the panel mounts!). Here it is completed! There’s just some cable management to sort out (possibly a false bottom in the case).

Intel 5xxx and Proxmox

Just a quick one today. I started playing with Proxmox on a Beelink U59 Pro and ran into some VM instability. It turns out I wasn’t alone.

Proxmox Forum: https://forum.proxmox.com/threads/pve-keeps-rebooting-beelink-u59.112074/

Uh oh! I thought I would be stuck turning this into a Windows mini PC. However, it turns out there are some CPU issues with the Intel 5xxx processors, here.

I had a look through the forums and it looks like the issue is with kernel 5.15. Proxmox have released a 5.19 kernel which appears to resolve it, and this has since been superseded by a 6.1 kernel.

Forum post here.

It’s easy to install, though:

```shell
apt update
apt install pve-kernel-6.1
reboot
```
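After the reboot, it’s worth confirming that you’re actually running the newer kernel. Below is a minimal Python sketch. It assumes platform.release() returns a Proxmox-style string such as 6.1.10-1-pve. The helper name kernel_at_least is hypothetical, and the 6.1 threshold comes from the post above.

```python
import platform
import re


def kernel_at_least(release: str, major: int, minor: int) -> bool:
    """Return True if a release string like '6.1.10-1-pve' is >= major.minor."""
    match = re.match(r"(\d+)\.(\d+)", release)
    if not match:
        # Unrecognised format: be conservative and report False.
        return False
    return (int(match.group(1)), int(match.group(2))) >= (major, minor)


if __name__ == "__main__":
    running = platform.release()
    status = "OK" if kernel_at_least(running, 6, 1) else "still on an older kernel"
    print(f"Running kernel {running}: {status}")
```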

Update – 27/02/2023

Turns out there is slightly more to the issue than a kernel patch.

You also need to limit the processor’s idle states (C-states) and patch the microcode for the processor.

Add the following to the GRUB_CMDLINE_LINUX_DEFAULT line in /etc/default/grub:

```
intel_idle.max_cstate=1 processor.max_cstate=1
```

Then update GRUB:

```shell
update-grub
```
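If you’d rather script the GRUB edit than do it by hand, here’s a minimal Python sketch. It appends the two parameters to the GRUB_CMDLINE_LINUX_DEFAULT line only if they aren’t already there, so running it twice is harmless. It works on the file contents as a string, and the function name add_cmdline_params is hypothetical. You would still need to write the result back to /etc/default/grub as root and then run update-grub.

```python
import re

PARAMS = ["intel_idle.max_cstate=1", "processor.max_cstate=1"]


def add_cmdline_params(grub_text: str, params: list[str]) -> str:
    """Append params to GRUB_CMDLINE_LINUX_DEFAULT="..." if not already present."""
    pattern = re.compile(r'^(GRUB_CMDLINE_LINUX_DEFAULT=")([^"]*)(")', re.MULTILINE)

    def _update(match: re.Match) -> str:
        existing = match.group(2).split()
        # Keep existing options in order and add only the missing ones.
        merged = existing + [p for p in params if p not in existing]
        return match.group(1) + " ".join(merged) + match.group(3)

    return pattern.sub(_update, grub_text, count=1)


example = 'GRUB_DEFAULT=0\nGRUB_CMDLINE_LINUX_DEFAULT="quiet"\n'
print(add_cmdline_params(example, PARAMS))
```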

Next, update the Intel microcode. Edit /etc/apt/sources.list and add non-free to the end of each line:

```
deb http://ftp.debian.org/debian bullseye main contrib non-free
deb http://ftp.debian.org/debian bullseye-updates main contrib non-free
deb http://security.debian.org bullseye-security main contrib non-free
```

Then update and install the package:

```shell
apt update
apt install intel-microcode
```

The new microcode is loaded at boot, so reboot afterwards.
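If you manage several boxes, the sources.list change can be scripted. Below is a minimal sketch. It assumes one-line deb entries like the ones above, and it doesn’t handle the newer deb822 .sources format. The function name add_component is hypothetical. Comments, and lines that don’t already list at least one component, are left alone.

```python
def add_component(sources_text: str, component: str = "non-free") -> str:
    """Append a component to every active deb/deb-src line that lacks it."""
    out_lines = []
    for line in sources_text.splitlines():
        fields = line.split()
        # Active one-line entry: type, URI, suite, then one or more components.
        if len(fields) >= 4 and fields[0] in ("deb", "deb-src") and component not in fields[3:]:
            line = line.rstrip() + " " + component
        out_lines.append(line)
    trailing = "\n" if sources_text.endswith("\n") else ""
    return "\n".join(out_lines) + trailing


example = "deb http://ftp.debian.org/debian bullseye main contrib\n# comment\n"
print(add_component(example), end="")
```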

Replacing ZFS Drive

Canary Light & Power Cuts

This post is going to be quite wordy! We’ve had a number of power cuts recently and, like any smart home, my house takes time to reboot.

There have been a couple of issues when the power comes back. The first is that some of my smart lights don’t support power restoration states, so they come on as soon as the power returns.

This got me thinking, and then I came across the “canary light”: a device that always comes on when the power resumes (just a standard Tuya bulb in my case).

When this light turns on, Home Assistant triggers an automation that waits two minutes (to make sure everything is back up) and then turns off all the lights that don’t support power restoration. It also emails me to say that power has been restored. I have tested it a couple of times and it works really well. Thankfully, none of the devices that power on are in bedrooms, or waiting two minutes in the middle of the night would definitely be bad!
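The automation’s logic is simple enough to model outside Home Assistant. Here is a hedged Python sketch of it. This is not Home Assistant’s own automation format. The Light class, the light names, and the turn_off and notify callables are hypothetical stand-ins for the real services. Only the two-minute wait, the “turn off lights without power restoration” step and the email step come from the description above.

```python
import time
from dataclasses import dataclass
from typing import Callable, Iterable

RECOVERY_DELAY_SECONDS = 120  # wait 2 minutes so everything is back up


@dataclass
class Light:
    name: str
    supports_power_restore: bool  # False = comes on whenever power returns


def lights_to_turn_off(lights: Iterable[Light]) -> list[str]:
    """Names of lights that can't restore their previous state after a cut."""
    return [light.name for light in lights if not light.supports_power_restore]


def on_canary_light_on(
    lights: Iterable[Light],
    turn_off: Callable[[str], None],
    notify: Callable[[str], None],
    delay: float = RECOVERY_DELAY_SECONDS,
) -> None:
    """Run when the canary bulb reports 'on', i.e. power has been restored."""
    time.sleep(delay)
    for name in lights_to_turn_off(lights):
        turn_off(name)
    notify("Power has been restored")


if __name__ == "__main__":
    house = [Light("hallway", False), Light("lounge", True)]
    # delay=0 so the example runs instantly; turn_off/notify just print here.
    on_canary_light_on(house, turn_off=print, notify=print, delay=0)
```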

I also purchased a UPS (I know, I know, but I’ve been spoiled by good power for a long time) and hooked it up to my server. Using NUT (Network UPS Tools), the server shuts down when the battery gets low and emails me.
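NUT’s upsmon makes the shutdown decision itself. Roughly, it treats a UPS as critical when it is both on battery (OB) and reporting low battery (LB). As an illustration only, here’s a Python sketch that parses the “variable: value” lines printed by upsc and applies the same rule. The sample text is made up, and the helper names are hypothetical.

```python
def parse_upsc(output: str) -> dict[str, str]:
    """Parse 'variable: value' lines as printed by `upsc <ups>`."""
    values = {}
    for line in output.splitlines():
        key, sep, value = line.partition(":")
        if sep:
            values[key.strip()] = value.strip()
    return values


def should_shut_down(values: dict[str, str]) -> bool:
    """Critical when the UPS is on battery (OB) and reports low battery (LB)."""
    flags = values.get("ups.status", "").split()
    return "OB" in flags and "LB" in flags


sample = """battery.charge: 8
ups.status: OB LB
"""
print(should_shut_down(parse_upsc(sample)))  # True
```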

I have an old TP-Link WR802N which can be used as an access point. It’s powered from a USB port on my server and plugged into a spare NIC in my Proxmox server, which I added as a bridge. This gives me Wi-Fi in the event of a power loss. It’s small and is running really well off the PC’s USB port. If you are going to do this, make sure you get the v1, as its power requirements are a lot lower than later versions.

That’s Wi-Fi and lights / smarts covered off.

The final part is my internet connection, which comes in on the other side of the house from my study. Currently my Vigor “modem” sits next to the phone socket, and I use powerline to get the connection across the house. As you’ve probably guessed, powerline doesn’t work when there is no power. So I will be running a new cable round the house to bring the phone line to the study, and then I will move the Vigor there!

Job nearly done!

Postfix From Rewrite

A quick article about rewriting the From address in Postfix. First, install the libsasl2-modules package.

Add the following to main.cf:

```
sender_canonical_classes = envelope_sender, header_sender
sender_canonical_maps = regexp:/etc/postfix/sender_canonical_maps
smtp_header_checks = regexp:/etc/postfix/header_check
```

Then create the files and their contents.

/etc/postfix/sender_canonical_maps

```
/.+/ newemail@domain.com
```

/etc/postfix/header_check

```
/^From:.*/ REPLACE From: newemail@domain.com
```

Reload Postfix and test:

```shell
postfix reload
mail -s "Test Subject" user@example.com < /dev/null
```

Check the mail logs to see if it’s successful. This trick is really useful if you use a 3rd party to relay email and they require some form of domain or address authentication.
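To see what the two regexp tables do to a message, here’s a rough Python simulation. It mimics the first-match-wins lookup and the case-insensitive matching that Postfix regexp tables use by default. It is only an illustration: Postfix uses POSIX regular expressions, not Python’s re module. The function names and sample addresses are hypothetical.

```python
import re

# (pattern, result) pairs mirroring the two files above.
SENDER_CANONICAL = [(r".+", "newemail@domain.com")]
HEADER_CHECKS = [(r"^From:.*", "REPLACE From: newemail@domain.com")]


def canonical_sender(address: str) -> str:
    """First matching pattern wins; no match leaves the address unchanged."""
    for pattern, result in SENDER_CANONICAL:
        if re.search(pattern, address, re.IGNORECASE):
            return result
    return address


def apply_header_checks(header: str) -> str:
    """Apply REPLACE actions; other header lines pass through untouched."""
    for pattern, action in HEADER_CHECKS:
        if re.search(pattern, header, re.IGNORECASE):
            verb, _, text = action.partition(" ")
            if verb == "REPLACE":
                return text
    return header


print(canonical_sender("root@myserver.local"))
print(apply_header_checks("From: root <root@myserver.local>"))
print(apply_header_checks("Subject: Test Subject"))
```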

Check_RSS

An old plugin back to life!

Below is the check_rss.py script for pulling RSS feeds into your monitoring platform. I’m currently using openITCOCKPIT, although I used it with Nagios before that.

You do need a couple of dependencies. On Ubuntu 22.04 these are:

- python3

- python3-feedparser

```python
#!/usr/bin/python3
"""
check_rss - A simple Nagios plugin to check an RSS feed.

Created to monitor status of cloud services.

Requires the feedparser and argparse Python libraries
(python-feedparser on Debian or Red Hat based systems).

If you find it useful, feel free to leave me a comment/email
at http://john.wesorick.com/2011/10/nagios-plugin-checkrss.html

Copyright 2011 John Wesorick (john.wesorick.com)

This program is free software: you can redistribute it and/or modify
it under the terms of the GNU General Public License as published by
the Free Software Foundation, either version 3 of the License, or
(at your option) any later version.

This program is distributed in the hope that it will be useful,
but WITHOUT ANY WARRANTY; without even the implied warranty of
MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
GNU General Public License for more details.

You should have received a copy of the GNU General Public License
along with this program. If not, see <http://www.gnu.org/licenses/>.
"""

import argparse
import calendar
import sys
import time

import feedparser


def fetch_feed_last_entry(feed_url):
    """Fetch a feed from a given URL and return its newest entry."""
    try:
        myfeed = feedparser.parse(feed_url)
    except Exception:
        exitcritical("Could not parse URL (%s)" % feed_url, "")

    if myfeed.bozo != 0:
        exitcritical("Malformed feed: %s" % myfeed.bozo_exception, "")

    # "status" is only set when the feed was fetched over HTTP(S)
    status = myfeed.get("status", 200)
    if status != 200:
        exitcritical("Status %s - %s" % (status, myfeed.feed.get("summary", "")), "")

    # A feed with 0 entries is good too
    if len(myfeed.entries) == 0:
        exitok("No news == good news", "")

    return myfeed.entries[0]


def split_terms(value):
    """Split a comma-separated option into lower-case search terms."""
    return [term.strip() for term in value.lower().split(",") if term.strip()]


def found(search, title, description, titleonly):
    """True if search is in the title or (unless titleonly) the description."""
    if search in title.lower():
        return True
    return not titleonly and search in description.lower()


def main(argv=None):
    """Gather user input and start the check"""
    description = "A simple Nagios plugin to check an RSS feed."
    epilog = """notes: If you do not specify any warning or
critical conditions, it will always return OK.
This will only check the newest feed entry.
Copyright 2011 John Wesorick (http://john.wesorick.com)"""
    version = "0.3"

    # Set up our arguments
    parser = argparse.ArgumentParser(description=description, epilog=epilog)
    parser.add_argument("--version", action="version", version=version)
    parser.add_argument(
        "-H", dest="rssfeed", help="URL of RSS feed to monitor", action="store", required=True
    )
    parser.add_argument(
        "-c", "--criticalif", dest="criticalif", help="critical condition if PRESENT", action="store"
    )
    parser.add_argument(
        "-C", "--criticalnot", dest="criticalnot", help="critical condition if MISSING", action="store"
    )
    parser.add_argument(
        "-w", "--warningif", dest="warningif", help="warning condition if PRESENT", action="store"
    )
    parser.add_argument(
        "-W", "--warningnot", dest="warningnot", help="warning condition if MISSING", action="store"
    )
    parser.add_argument(
        "-T",
        "--hours",
        dest="hours",
        help="Hours since last post. "
        "Will return critical if less than designated amount.",
        action="store",
    )
    parser.add_argument(
        "-t",
        "--titleonly",
        dest="titleonly",
        help="Search the titles only. The default is to search "
        "for strings matching in either the title or description",
        action="store_true",
        default=False,
    )
    parser.add_argument(
        "-p",
        "--perfdata",
        dest="perfdata",
        help="If used will keep very basic performance data "
        "(0 if OK, 1 if WARNING, 2 if CRITICAL, 3 if UNKNOWN)",
        action="store_true",
        default=False,
    )
    parser.add_argument(
        "-v",
        "--verbosity",
        dest="verbosity",
        help="Verbosity level. 0 = Only the title and time is returned. "
        "1 = Title, time and link are returned. "
        "2 = Title, time, link and description are returned (Default)",
        action="store",
        choices=["0", "1", "2"],
        default="2",
    )

    try:
        args = parser.parse_args()
    except SystemExit as err:
        if err.code == 0:
            raise  # --help and --version exit normally
        # Something didn't work. We will return an unknown.
        exitunknown("Invalid argument(s) %s" % parser.format_usage())

    perfdata = args.perfdata

    # Parse our feed, getting title, description and link of newest entry.
    rssfeed = args.rssfeed
    if not rssfeed.startswith(("http://", "https://")):
        rssfeed = "http://{rssfeed}".format(rssfeed=rssfeed)

    # We have everything we need, let's start
    last_entry = fetch_feed_last_entry(rssfeed)
    feeddate = last_entry.get("updated_parsed") or last_entry.get("published_parsed")
    if feeddate is None:
        exitunknown("Newest entry has no date")
    title = last_entry.get("title", "")
    description = last_entry.get("description", "")
    link = last_entry.get("link", "")

    # Hours since the last post. feedparser's *_parsed dates are in UTC, so
    # convert with calendar.timegm rather than comparing against local time.
    hourssinceposted = (time.time() - calendar.timegm(feeddate)) / 3600

    # We will form our response here based on the verbosity levels.
    # This makes the logic below a lot easier.
    if args.verbosity == "0":
        output = "Posted %.1f hrs ago ; %s" % (hourssinceposted, title)
    elif args.verbosity == "1":
        output = "Posted %.1f hrs ago ; Title: %s; Link: %s" % (hourssinceposted, title, link)
    else:
        output = "Posted %.1f hrs ago ; Title: %s ; Description: %s ; Link: %s" % (
            hourssinceposted,
            title,
            description,
            link,
        )

    def any_present(option):
        return any(found(s, title, description, args.titleonly) for s in split_terms(option))

    def any_missing(option):
        return any(not found(s, title, description, args.titleonly) for s in split_terms(option))

    # Check for strings that match / are missing, resulting in critical status
    if args.criticalif and any_present(args.criticalif):
        exitcritical(output, perfdata)
    if args.criticalnot and any_missing(args.criticalnot):
        exitcritical(output, perfdata)

    # Check for time difference (in hours), resulting in critical status
    if args.hours and int(hourssinceposted) <= int(args.hours):
        exitcritical(output, perfdata)

    # Check for strings that match / are missing, resulting in warning status
    if args.warningif and any_present(args.warningif):
        exitwarning(output, perfdata)
    if args.warningnot and any_missing(args.warningnot):
        exitwarning(output, perfdata)

    # If we made it this far, we must be ok
    exitok(output, perfdata)


def exitok(output, perfdata):
    if perfdata:
        print("OK - %s|'RSS'=0;1;2;0;2" % output)
    else:
        print("OK - %s" % output)
    sys.exit(0)


def exitwarning(output, perfdata):
    if perfdata:
        print("WARNING - %s|'RSS'=1;1;2;0;2" % output)
    else:
        print("WARNING - %s" % output)
    sys.exit(1)


def exitcritical(output, perfdata):
    if perfdata:
        print("CRITICAL - %s|'RSS'=2;1;2;0;2" % output)
    else:
        print("CRITICAL - %s" % output)
    sys.exit(2)


def exitunknown(output):
    print("UNKNOWN - %s" % output)
    sys.exit(3)


if __name__ == "__main__":
    sys.exit(main(sys.argv))
```
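The plugin communicates with the monitoring system through two things. The first is its exit code: 0 OK, 1 WARNING, 2 CRITICAL, 3 UNKNOWN. The second is one line of output, optionally followed by performance data after a pipe in the standard 'label'=value;warn;crit;min;max form. A typical invocation might be ./check_rss.py -H https://status.example.com/feed.rss -c outage -w degraded -p, where the feed URL is a placeholder. To sanity-check that output when wiring the plugin into openITCOCKPIT or Nagios, here’s a small Python sketch that splits such a line back into its parts. parse_plugin_output is a hypothetical helper and is not part of check_rss.

```python
from typing import NamedTuple, Optional

EXIT_CODES = {"OK": 0, "WARNING": 1, "CRITICAL": 2, "UNKNOWN": 3}


class PluginResult(NamedTuple):
    state: str
    exit_code: int
    message: str
    perfdata: Optional[dict]


def parse_plugin_output(line: str) -> PluginResult:
    """Split "STATE - message|'label'=value;warn;crit;min;max" into its parts."""
    if "|" in line:
        # Perfdata follows the last pipe in the line.
        text, _, perf = line.rpartition("|")
    else:
        text, perf = line, ""
    state, _, message = text.partition(" - ")
    perfdata = None
    if perf:
        label, _, values = perf.partition("=")
        value, warn, crit, low, high = (int(v) for v in values.split(";"))
        perfdata = {"label": label.strip("'"), "value": value, "warn": warn,
                    "crit": crit, "min": low, "max": high}
    return PluginResult(state, EXIT_CODES[state], message, perfdata)


result = parse_plugin_output("CRITICAL - Posted 1.0 hrs ago ; outage|'RSS'=2;1;2;0;2")
print(result.state, result.exit_code, result.perfdata)
```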

Digital Portfolio Office

I wouldn’t say that the transformation had stalled, or that it wasn’t welcome, but we had some issues finding teams that were willing to “go next”…

That’s how it started. How could we get the business to engage with the transformation programme? How could we get them involved?

In the early days of the programme we ran “buzz” days to get teams excited about the new ways of working in Office 365. The sexy stuff was done and everyone was working in the brave new way. The business had taken to it: success!

Our CRM and ERP elements were different, and had stalled. CRM was going well and the team were delivering to new services, and ERP was live for finance, but how could we get to the hard-to-reach areas?

We invited Chris from Cavendish Wood to come and spend some time with us. He worked with the digital transformation team and the management team, and together we came up with a plan: the Digital Portfolio Office (DPO).

The DPO was headed up by the CEO, and we appointed a business analyst and a project manager. The DPO is like a modern technical suggestion box: no idea is too stupid, and ideas are taken from across the organisation. We were keen that this didn’t appear to be another digital / IT initiative, and the CEO wrote a couple of blog posts to promote it.

It started slowly. Had we done the right thing? Then we started implementing some of the ideas and giving feedback on every submission, and the floodgates opened, with ideas coming from all over the business: some tweaks to existing systems, some multi-month projects and some custom Power Apps development.

It’s been nearly 2 years since we set up the DPO, and in that time we have delivered a number of solutions. It’s been a success.

I’d recommend a digital suggestion box to any company. Make sure you have executive sponsorship and that you give feedback on, or act on, every suggestion. Finally, don’t be afraid to ask for help. Sometimes it’s hard to see the wood for the trees, and just a few days with an external consultant can offer massive returns.

Yummy, yummy Pis – June 2022 Update


I’ve decided this will become a running update of the Pis I am using and what I am doing with them. Updates will be posted to the top of the page.

June 2022 – Update

Even more has changed: you can now run Proxmox and OPNsense (both with limitations) on Pis. I hope both these projects mature, as an OPNsense Pi at the caravan would be amazing and a Proxmox quorum Pi at home would help a lot!

Ironically, the Pi shortage has got worse and isn’t likely to improve for another year or so. I no longer use Pis behind TVs, so I have managed to get some back into rotation.

So here we go:

- Pi 4 2GB – OctoPi

- Pi 4 2GB – Pi Sync

- Pi 4 2GB – Pi KVM

- Pi 4 2GB – Caravan HA

- Pi 4 2GB – Caravan Pi

- Pi 4 2GB – Frame Pi

- Pi 4 4GB – CCTV Pi

- Pi 400 – Study Pi

- Pi 3B+ – Wildlife Cam

Spare Pis

- 2x Pi 2

- Pi 3+

- Pi 4 8GB

- Pi 4 1GB

- Pi Pico

- 2x Zero WH

- Zero

- Zero 2

May 2021 – Update

A lot has changed in the home Pi world… ESXi for ARM has been released and I’ve been testing it; it works and is stable. Hopefully OPNsense will come to the Pi natively, and then there will be some interesting opportunities to run Pi firewalls. I have looked at OpenWrt on the Pi, but prefer the completeness of the xSense ecosystem. I’ve upgraded a couple of Pis due to performance issues. I have also migrated the Pi 400 to SSD and it’s a lot quicker. I have been keeping an eye on SD card performance and have settled on Samsung Evo Plus and SanDisk Ultra / Extreme cards. There appears to be a shortage of 2GB Pis at the moment, and I need an additional one to replace the Pi in the kitchen, which I am currently using for the CCTV Pi.

A full list below:

- Pi 4 1GB – CCTV Pi (upgraded from a Pi 3+ for more streams, including the 3D printer and new Eufy cams)

- Pi 4 2GB – OctoPi (3D printer control)

- Pi 4 2GB – Pi Sync

- Pi 4 2GB – Pi KVM

- Pi 4 2GB – Caravan HA

- Pi 4 2GB – Caravan Pi

- Pi 4 4GB – Bedroom Kodi

- Pi 400 – Study Pi

Spare Pi(s)

- 2x Pi 2

- 2x Pi 3+

- Pi 4 8GB (currently testing ARM ESXi)

- Pi Pico

- Zero WH

- Zero

December 2020 – Update

In addition to the Pis below, I now have two more in use:

- Pi 2 – Backup Pi – Using rsync and rclone to manage all my backups locally and to sync to OneDrive for Business.

- Pi 4 2GB – Pi KVM – Find out more in this post

I have also managed to purchase a Pi 2 v1.2 to go on the Pi versions board. This completes my collection of historical Pi Bs. When the next version of the Pi comes out, the Pi 4s will slowly be retired to the board!

I am also working on a project with Pi Zero WHs to create a multizone audio system using Volumio. This project will make use of HiFiBerry’s popular DAC HATs as well as some custom integration work. I currently have 3 Pi “audio zones” and am waiting for the HATs to arrive before I begin testing.

Spare Pi(s)

- Pi 2, Pi 3+, Pi 4 8GB

- Pi Zero WH

- Pi 2 v1.2 ready for mounting

Retired Pi(s)

- Pi 3+ – First Home Assistant server (migrated to the new Proxmox host)

Why no love for the Pi A or Compute Module? Although I have a good collection of old Pis, you may notice that I don’t have any Pi A or Compute Modules on the list. This is because I don’t use them! I’ve never had a use for the Compute Modules. I do have a Pi A in a wildlife camera, but it currently isn’t being used. I love the Pi B and Zero form factors, which is why I use them the most. If I ever have a project that uses the other form factors, I may well collect the back catalogue of those too!

Original Post – November 5th

From the moment they were announced, I knew that the way I did computing at home had changed. They are ideal as test boxes, development machines, media players and now even mini ESXi servers! I’ve used them for many things…

The Pis I currently have in use are:

- Pi 4 1GB – Kitchen LibreELEC

- Pi 4 2GB – 2nd Device in Lego Room

- Pi 4 4GB – Bedroom LibreELEC

- Pi 400 – 2nd Device in Study

- Pi 3+ – CCTV Viewer

- Pi 3+ – Garage

- Pi 3+ – Home Assistant

I have used them for other projects in the past, including getting started with Home Assistant, a mini ESXi server, custom automations, an OSMC media player, a Plex server, learning things with Ali and wildlife cameras; the list goes on. I hope they are around for a long time to come!

In the gallery below you can see the latest Pi 400 and my display of Pis from the original Pi to the Pi 3 B+ (with space for the Pi 4 1GB, 2GB and 4GB versions… the 8GB version will start a new board). Next are my Pis ready for use (Pi Zero WH, Pi 3 B+ and Pi 4 8GB). I also have a Pi 2 in the cupboard should I need something older to play with, and yes, that is a ZX Spectrum +2 behind them. Finally, there’s my Pi Zero board, up to the latest Pi Zero WH.

New Home Smarts Update

It’s been a while since I did a blog post. I didn’t realise how long until I saw the last update! Well, since May 2021 I’ve moved house, tweaked my technology stack and added some new bits.

This is what the house looks like now:

- Alexa – Voice Control

- Flic – Physical Control

- Home Assistant – The glue

The technologies now in use are:

| Type | Manufacturer / Platform | Staying |
|---|---|---|
| Plugs / Light Strips / HA Zigbee | Sonoff | Yes |
| Lights / Buttons / PIR | Hue | Yes |
| Heating | Tado / Tado TRVs | Yes |
| TV Remote | Logitech | No – EOL |
| Button / H&T / PIR | Shelly | Yes – Testing |
| Cameras | Eufy | Yes |
| Cameras | MotionEye | Yes |
| Lights / Plugs | Kasa | Yes – Caravan |
| Buttons | Flic | Yes |
| PIR | ESPHome | Yes – Limited |
| Christmas Lights / Bulbs | Tuya | Yes |
| Automation | SmartBot | Yes – Limited |
| Ambilight | Govee | Yes – Limited |
| AirCon | Sensibo | Yes – Limited |

Everything is currently working well and there are very few issues.

In time I will write another post about some of the technologies and routines that I now have, including the whalesong motion sensor for when you’re in the cloakroom!